Increasing FPL Intel's lambda performance with gzip

How I implemented gzip compression in Rust AWS Lambdas to improve FPL Intel's Lighthouse performance score

What's the issue?

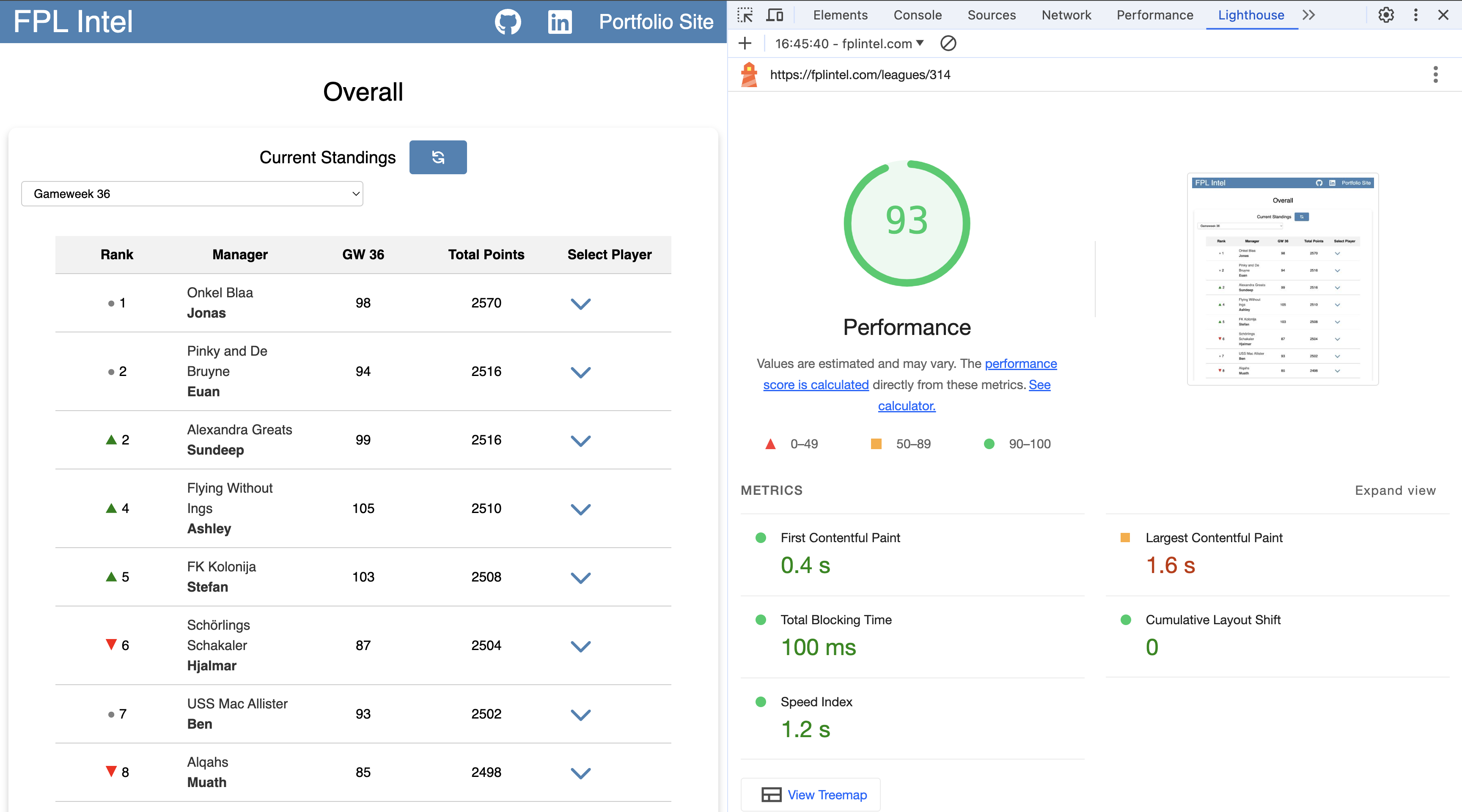

Over the course of this year's season I have noticed FPL Intel getting slightly slower, so it was time to re-run the Lighthouse tests to see where the problems were.

The performance score looks good, but there were a couple of improvements to be made.

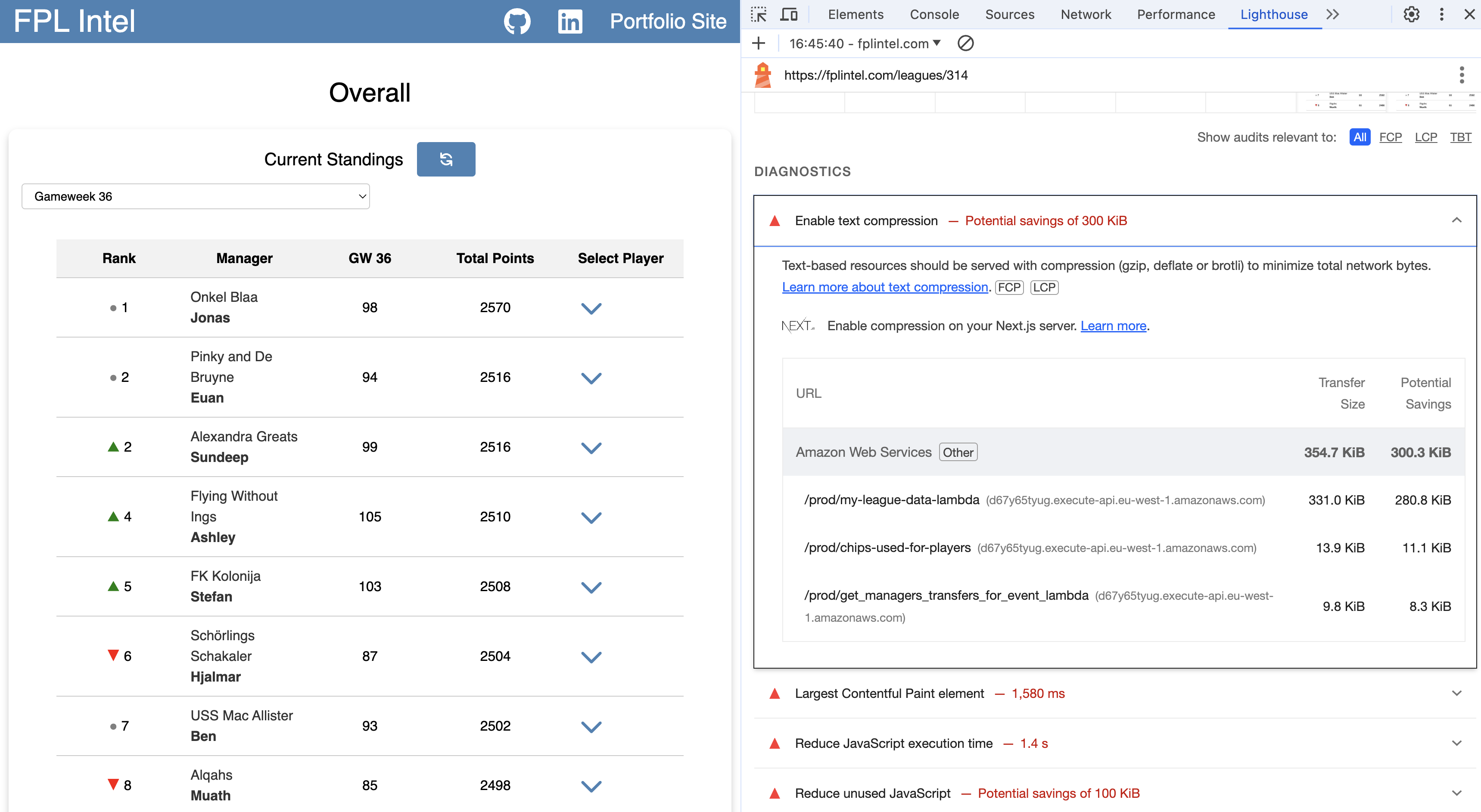

The focus of this post is the "Enable text compression" improvement. It has only become noticeable towards the end of the season because the data returned by the lambda endpoints has grown with each gameweek. For example, to render the graph, each player's stats for each gameweek are needed. With 38 gameweeks, it adds up by the end.

So how to fix this?

I recommend reading the Lighthouse docs.

Lighthouse takes every text-based response that does not have a content-encoding header set to br, gzip or deflate and compresses it with gzip to estimate the potential saving.

If the original response size is more than 1.4KiB and the potential saving is more than 10% of the original size, Lighthouse flags the response in the results.
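The flagging rule above can be written down as a small check. This is only a sketch of the rule as described in this post, not Lighthouse's actual code; the function and constant names are made up for illustration.

```rust
/// Responses at or below this size are ignored (1.4 KiB, as described above).
const MIN_ORIGINAL_BYTES: f64 = 1.4 * 1024.0;
/// The gzip saving must exceed this fraction of the original size.
const MIN_SAVING_RATIO: f64 = 0.10;

/// Hypothetical helper: returns true when a response of `original_bytes`
/// that gzips down to `compressed_bytes` would be flagged under
/// "Enable text compression" according to the rule in this post.
fn would_be_flagged(original_bytes: usize, compressed_bytes: usize) -> bool {
    let original = original_bytes as f64;
    let saving = original - compressed_bytes as f64;
    original > MIN_ORIGINAL_BYTES && saving > original * MIN_SAVING_RATIO
}
```

With this rule, a 50,000 byte JSON response that gzips to 8,000 bytes is flagged, while a 1,000 byte response is ignored however well it compresses.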

Code

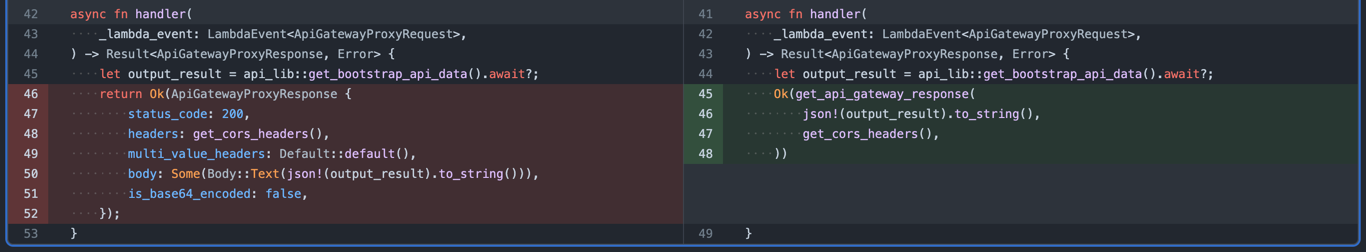

FPL Intel's lambdas are written in Rust. I decided to create a function in my lambda workspace that handles all lambda responses, so every lambda benefits from this change without repeating any code.

I used the flate2 crate to gzip the response body when it is over 1000 bytes. I used Rust's match expression to handle compression errors: if any occur, the lambda just returns the response body uncompressed.

```rust
// Import paths are assumed; adjust them to the crate versions you use.
use std::io::Write;

use aws_lambda_events::encodings::Body;
use aws_lambda_events::event::apigw::ApiGatewayProxyResponse;
use flate2::{write::GzEncoder, Compression};
use http::{HeaderMap, HeaderValue};

pub fn get_api_gateway_response(
    response_body: String,
    mut headers: HeaderMap,
) -> ApiGatewayProxyResponse {
    let (body, is_base64_encoded) =
        get_response_body(response_body, &mut headers);

    ApiGatewayProxyResponse {
        status_code: 200,
        headers,
        multi_value_headers: Default::default(),
        body,
        is_base64_encoded,
    }
}

fn get_response_body(
    response_body: String,
    headers: &mut HeaderMap,
) -> (Option<Body>, bool) {
    // Bodies at or below this size are returned uncompressed.
    const BYTES_LENGTH_THRESHOLD: usize = 1000;
    if response_body.as_bytes().len() > BYTES_LENGTH_THRESHOLD {
        let mut encoder = GzEncoder::new(Vec::new(), Compression::default());
        match encoder.write_all(response_body.as_bytes()) {
            Ok(_) => match encoder.finish() {
                Ok(compressed_body) => {
                    headers.insert("Content-Encoding", HeaderValue::from_static("gzip"));
                    // The gzip bytes are binary, so they are returned base64 encoded.
                    (Some(Body::Text(base64::encode(compressed_body))), true)
                }
                // Fall back to the uncompressed body if compression fails.
                Err(_) => (Some(Body::Text(response_body)), false),
            },
            Err(_) => (Some(Body::Text(response_body)), false),
        }
    } else {
        (Some(Body::Text(response_body)), false)
    }
}
```

Using this function simplifies the lambda handlers:

```rust
async fn handler(
    _lambda_event: LambdaEvent<ApiGatewayProxyRequest>,
) -> Result<ApiGatewayProxyResponse, Error> {
    let output_result = api_lib::get_bootstrap_api_data().await?;
    Ok(get_api_gateway_response(
        json!(output_result).to_string(),
        get_cors_headers(),
    ))
}
```

Now all my lambda endpoints will benefit from compression with gzip!
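One thing the response function does not do is check whether the client asked for gzip in its Accept-Encoding request header. Browsers send that header by default, but other API clients may not. A minimal sketch of such a check is below. The `accepts_gzip` helper is hypothetical and not part of FPL Intel's code, and it deliberately ignores the `*` wildcard and the `x-gzip` alias.

```rust
/// Hypothetical helper: returns true when an Accept-Encoding header value
/// lists gzip with a non-zero quality value (q defaults to 1 when absent).
fn accepts_gzip(accept_encoding: &str) -> bool {
    accept_encoding.split(',').any(|entry| {
        // Each entry looks like "gzip" or "gzip;q=0.8".
        let mut parts = entry.split(';');
        let coding = parts.next().unwrap_or("").trim();
        if !coding.eq_ignore_ascii_case("gzip") {
            return false;
        }
        // Find an optional q parameter; "q=0" means "do not send gzip".
        let quality = parts
            .find_map(|param| {
                let param = param.trim();
                param
                    .strip_prefix("q=")
                    .or_else(|| param.strip_prefix("Q="))
            })
            .and_then(|value| value.trim().parse::<f32>().ok())
            .unwrap_or(1.0);
        quality > 0.0
    })
}
```

A handler could read the header from the incoming request and only take the compression path when this returns true, falling back to the plain body otherwise.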

Result

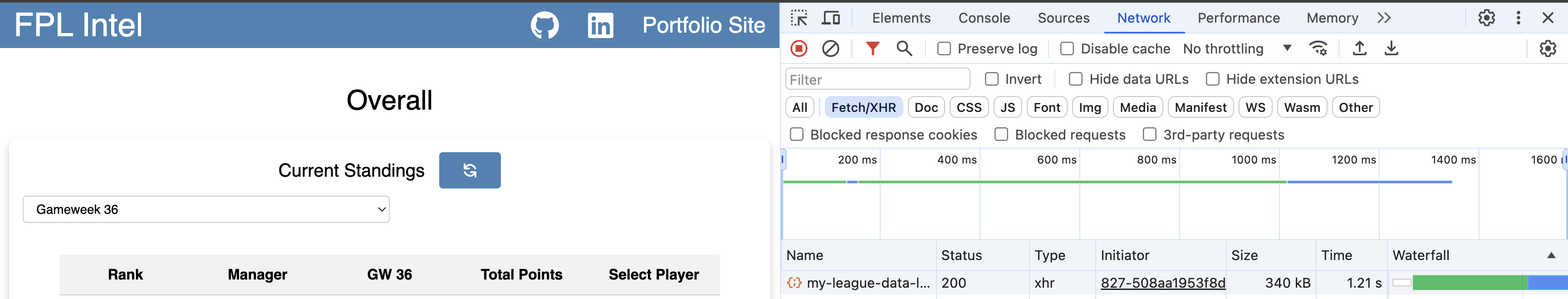

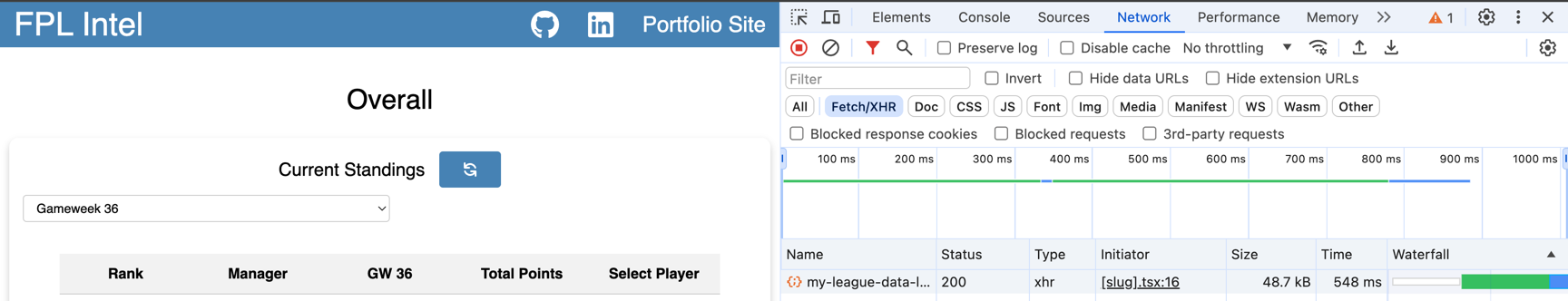

Largest Network Request Before:

Largest Network Request After:

As you can see there is a drastic reduction in the response size and a nice time saving.

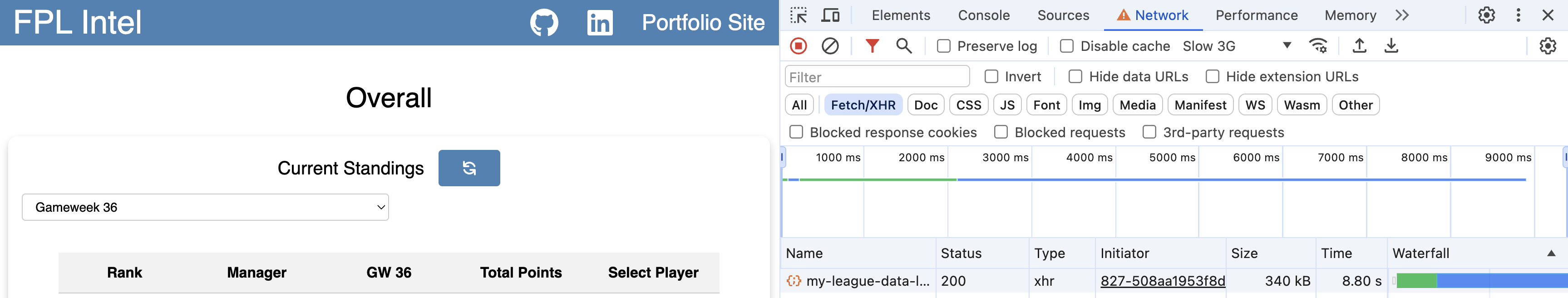

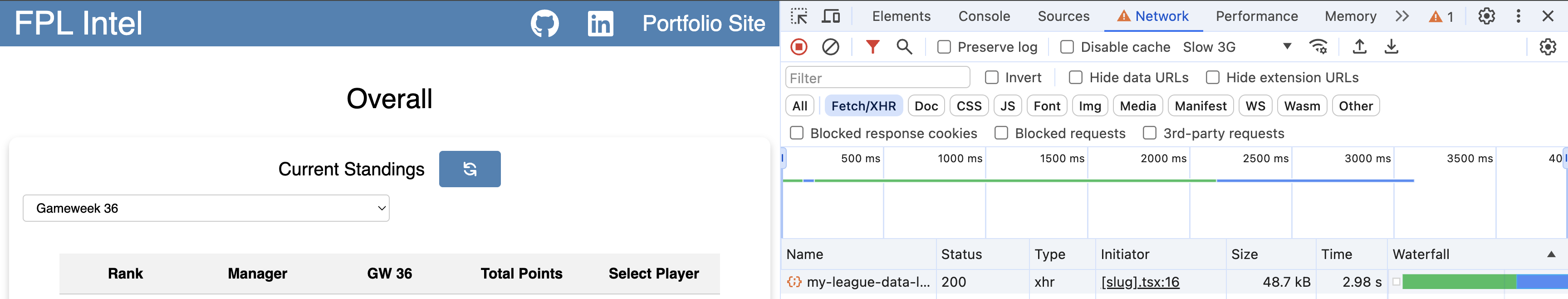

Compression isn't free. It takes time on the server to compress and time on the client to decompress. So the time saved

isn't as drastic as the bytes saved, but the slower the network the greater the difference. For example, below is the same

comparison but with throttling in Chrome's dev tools set to slow 3G.

Largest Network Request Before:

Largest Network Request After:

There's a much bigger improvement now! It is important to test using the throttling dev tool to make sure customers with a worse network connection can still enjoy the service.
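A rough back-of-the-envelope model shows why the slower network benefits more. The sketch below treats the saving as the transfer time avoided minus a fixed cost for compressing and decompressing. The function name and all the numbers are made up for illustration; they are not measurements from FPL Intel.

```rust
/// Hypothetical model: seconds saved by sending `compressed_bytes` instead of
/// `original_bytes` over a link of `bytes_per_second`, after paying
/// `codec_seconds` in total to compress and decompress.
fn seconds_saved(
    original_bytes: u64,
    compressed_bytes: u64,
    bytes_per_second: u64,
    codec_seconds: f64,
) -> f64 {
    let before = original_bytes as f64 / bytes_per_second as f64;
    let after = compressed_bytes as f64 / bytes_per_second as f64 + codec_seconds;
    before - after
}
```

Taking an illustrative 500,000 byte response that gzips to 100,000 bytes with 0.01 seconds of codec cost, the model gives a saving of about 0.07 seconds at 5,000,000 bytes per second and about 7.99 seconds at 50,000 bytes per second. The codec cost stays the same, so it matters less and less as the network gets slower.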

Still room to improve!